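First, I'd like to share a story with you. Let's turn the clock back 200 million years. Our story concerns the neocortex. Early mammals such as rodents (in fact, only mammals have a neocortex) had a neocortex about the size and thickness of a postage stamp. It was a thin covering wrapped around their walnut-sized brains. The neocortex was no small thing: it gave these animals a new kind of thinking. Unlike non-mammals, whose behavior was essentially fixed, mammals with a neocortex could invent new behaviors. For example, when a mouse fleeing a predator finds its path blocked, it will try to find a new way out. It may escape, or it may end up in the cat's mouth, but when it succeeds, it remembers what worked, and that eventually becomes a new behavior. Remarkably, this newly learned behavior spreads quickly through the whole colony. We can imagine a mouse watching from the side and saying, "Wow, that was clever, going around that rock to escape!" and then picking up the trick with ease.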

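Non-mammals, by contrast, couldn't do any of this; their behavior stayed fixed. To be precise, they could acquire new behaviors too, but not overnight: it might take a thousand generations for a species to develop a new fixed behavior. In the wild world of 200 million years ago, that pace of evolution was no problem. The environment changed very slowly, with a major upheaval perhaps once every 10,000 years, and over such a long span a species had time to evolve a new behavior.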

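And so things went along fine. Then disaster struck. Fast-forward to 65 million years ago: the Earth suffered a sudden, violent change in its environment, which we now call the Cretaceous extinction event. The dinosaurs were wiped out, 75 percent of the Earth's species went extinct, and mammals took over the ecological niches of the others. We can imagine those mammals commenting on this turn of evolution: "Hmm, our neocortex really came in handy when it counted." After that, the neocortex kept growing. Mammals got bigger, their brains expanded rapidly, and the neocortex grew fastest of all, developing its distinctive ridges and folds, which further increase its surface area. If you took the human neocortex and stretched it out flat, it would be about the size of a table napkin, and it is still a thin structure, about as thick as a napkin. With its convoluted, deeply folded shape, the neocortex now makes up about 80 percent of our brain. It is where we do our thinking, and it also moderates and refines our behavior. Today our brains still generate primitive needs and motivations, but the neocortex quietly tempers our wilder urges to conquer and channels them into civilized pursuits such as writing poetry, developing apps, and even giving TED talks. For all of this, the neocortex deserves the credit.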

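Fifty years ago, I wrote a paper on how the brain works. I described the brain as a series of modules. Each module does its own job according to a certain pattern, but it can also learn and remember new patterns and apply them. These patterns are organized in hierarchies, and we create that hierarchy with our own thinking. Fifty years ago, given the limitations of the time, progress was slow, but that work got me a meeting with President Johnson. I've been working in this field for 50 years, and just a year and a half ago I published a new book, How to Create a Mind, which takes up the same subject, except that now, fortunately, I have plenty of evidence to support it. Neuroscience is giving us an enormous amount of data about the brain, and that amount is doubling every year; the spatial resolution of brain scanning is also doubling every year. We can now see inside a living brain, observe individual interneural connections, and watch connections form and fire in real time. We can see the brain create our thoughts, and, conversely, see our thoughts create and strengthen the brain; thought itself is essential to how the brain develops.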

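Now let me briefly describe how the brain works. I've actually counted these modules: we have about 300 million of them, arranged in different levels of a hierarchy. Let's take a simple example. Suppose I have a group of modules that recognize the crossbar of a capital "A". That is all they care about. Beautiful music can be playing or an attractive woman can walk by, and they won't notice, but when they see the crossbar of an "A", they get very excited, shout "Crossbar!" in unison, and immediately signal on their output axon that they have recognized it. Next, higher-level modules, conceptual modules, come into play. The higher the level, the more abstract the thinking. For example, a low level might recognize the letter "A", and a few levels up, some level recognizes the word "APPLE". Information also flows in the other direction. When the level that recognizes "APPLE" has seen A-P-P-L, it thinks, "Hmm, I bet the next letter is an E." It then sends a signal down to the modules that recognize "E", warning them: "Hey, heads up, an E is coming!" The "E" modules lower their threshold, so that if they see something that might be an E, they call it an "E". Normally that wouldn't be enough, but we are expecting an E and what we see is similar enough, so we conclude that it is an "E". Once the "E" is recognized, "APPLE" is recognized.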
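The top-down prediction described above can be sketched as a toy model. This is a minimal illustration, not Kurzweil's actual model: the threshold values 0.8 and 0.5, the evidence scores, and the function names `recognize_letter` and `recognize_word` are all hypothetical, chosen only to show how an expectation from a higher level lets a faint letter get through.

```python
# Toy illustration of top-down prediction in a recognizer hierarchy.
# Threshold values and evidence scores are hypothetical.

BASE_THRESHOLD = 0.8    # evidence a letter module needs when nothing is expected
PRIMED_THRESHOLD = 0.5  # lowered threshold once a higher level predicts the letter


def recognize_letter(candidate, evidence, expected=None):
    """Return True if the module for `candidate` fires.

    `evidence` (0..1) is how well the input matches the letter. If a
    higher-level module expects this letter, its threshold is lowered.
    """
    threshold = PRIMED_THRESHOLD if candidate == expected else BASE_THRESHOLD
    return evidence >= threshold


def recognize_word(word, observations):
    """Word-level module: read letters in order, predicting each next letter.

    `observations` is a list of (candidate_letter, evidence) pairs.
    """
    if len(observations) != len(word):
        return False
    for position, (candidate, evidence) in enumerate(observations):
        # After a matching prefix, the word module tells the module for the
        # next letter to expect it ("Hey, an E is coming!").
        expected = word[position] if position > 0 else None
        if candidate != word[position]:
            return False
        if not recognize_letter(candidate, evidence, expected):
            return False
    return True


clear_prefix = [("A", 0.9), ("P", 0.9), ("P", 0.9), ("L", 0.9)]
print(recognize_letter("E", 0.6))                           # False: too faint on its own
print(recognize_word("APPLE", clear_prefix + [("E", 0.6)]))  # True: the E was expected
```

The same faint "E" that fails in isolation is accepted inside "APPLE", which is the effect the talk describes; a much fainter mark (evidence 0.4) is still rejected.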

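Go up another five levels and we are at a fairly high level of the hierarchy. Here we have all kinds of perceptual abilities: some modules can sense a particular fabric, recognize a particular voice quality, even smell a particular perfume, and then tell me: my wife has just entered the room!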

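Go up another 10 levels and we reach a very high level, probably in the frontal cortex. Here our modules are passing judgment on people and things: That's funny! She's pretty!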

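You might think these high-level judgments are the complicated part, but what is actually more complex is the hierarchy beneath them. There was a 16-year-old girl undergoing brain surgery. The surgeons needed to talk to her during the operation, so they kept her awake. Being conscious is no obstacle to this kind of surgery, because there are no pain receptors in the brain. Surprisingly, whenever the surgeons stimulated certain very small areas of her neocortex, shown here in red, she would burst out laughing. At first they thought they were triggering some kind of laugh reflex, but they quickly realized that was not the case: these particular areas of the neocortex detect humor, and whenever they were stimulated, she found everything hilarious. "You guys are so funny just standing around" was her typical explanation. And the scene was not funny at all; everyone was in the middle of a tense operation.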

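So what's new now? Computers are getting smarter. Using techniques that work much like the neocortex, they are learning to master human language. I have described an algorithm similar to hierarchical hidden Markov models (Markov models are statistical models used in natural language processing), which I have been working on since the 1990s. "Jeopardy!" is a natural-language quiz show, and IBM's Watson computer scored higher on it than the two best players combined. It even got this hard clue: "A long, tiresome speech delivered by a frothy pie topping." What is it? It quickly answered: "What is a meringue harangue?" Ken Jennings and the other player didn't get it. That is a hard, subtle question, and it shows that computers are mastering human language. In fact, Watson developed its language ability by reading Wikipedia and several other encyclopedias.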
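For readers unfamiliar with hidden Markov models, here is the standard forward algorithm for an ordinary, non-hierarchical HMM, which computes how probable an observed sequence is by summing over every possible sequence of hidden states. It is offered only as a point of reference; it is not Kurzweil's hierarchical algorithm, and the two-state part-of-speech model (`STATES`, `START`, `TRANS`, `EMIT`) and its probabilities are invented for illustration.

```python
# Forward algorithm for a plain (flat) hidden Markov model.
# The two-state toy model below is hypothetical; its probabilities are invented.

def forward(observations, states, start_p, trans_p, emit_p):
    """Return P(observations) by summing over all hidden state paths."""
    # alpha[s] = P(observations so far, current hidden state = s)
    alpha = {s: start_p[s] * emit_p[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: emit_p[s][obs] * sum(alpha[prev] * trans_p[prev][s] for prev in states)
            for s in states
        }
    return sum(alpha.values())


STATES = ("Noun", "Verb")
START = {"Noun": 0.6, "Verb": 0.4}
TRANS = {"Noun": {"Noun": 0.7, "Verb": 0.3}, "Verb": {"Noun": 0.4, "Verb": 0.6}}
EMIT = {"Noun": {"dogs": 0.9, "bark": 0.1}, "Verb": {"dogs": 0.2, "bark": 0.8}}

print(forward(["dogs", "bark"], STATES, START, TRANS, EMIT))  # about 0.209
```

The dynamic-programming table `alpha` keeps the cost linear in sequence length instead of exponential in the number of possible state paths.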

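Five to 10 years from now, search engines will no longer just look for combinations of words and links. They will try to understand information, reading the vast contents of the Internet and of books to extract knowledge. Imagine you are out for a stroll one day when the Google assistant on your device pipes up: "Mary, you mentioned last month that the glutathione supplement you're taking isn't working because it can't get past the blood-brain barrier. Good news! Thirteen seconds ago, new research came out showing a new way to take glutathione. Let me summarize the report for you."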

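Twenty years from now, we will have nanobots, because technology keeps getting smaller, and that trend is accelerating. These devices will enter our brains through the capillaries and ultimately connect our neocortex to a synthetic neocortex in the cloud, making it an extension of our own. Today a smartphone has a computer built in. If we need 10,000 computers to run a complex search in a few seconds, we can get that capacity by accessing the cloud. In the 2030s, when you need more neocortex, you will be able to connect to the cloud directly from your brain and gain extraordinary capabilities. For example, say I'm out walking and I see someone in the distance. "Good heavens, isn't that Chris Anderson (TED's curator)? He's coming my way. I'd better seize this chance and say something brilliant! But I've only got three seconds, and the 300 million modules in my neocortex clearly aren't going to be enough. I need to borrow a billion more!" So I'll connect to the cloud instantly. My thinking will combine the strengths of the biological and the non-biological. The non-biological part will benefit from the "law of accelerating returns", meaning that the returns from technology grow exponentially rather than linearly. Remember what happened the last time the neocortex expanded dramatically? That was two million years ago, when we were still early humanoids and began to develop large foreheads. Other primates have a sloping brow because they don't have the frontal cortex. But the frontal cortex was not a qualitative change. It was a quantitative expansion of the neocortex, and that additional thinking capacity eventually enabled a qualitative leap. It let us invent language, create art, develop science and technology, and hold TED talks, achievements no other species has managed.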
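The gap between linear and exponential returns is easy to underestimate. As a purely illustrative calculation (the starting value of 1 and both growth rates are assumptions, not figures from the talk), compare a quantity that gains one unit per year, $L(t)$, with one that doubles every year, $E(t)$:

```latex
\begin{aligned}
L(t)  &= 1 + t,  & E(t)  &= 2^{t} \\
L(10) &= 11,     & E(10) &= 2^{10} = 1024 \\
L(30) &= 31,     & E(30) &= 2^{30} \approx 1.07 \times 10^{9}
\end{aligned}
```

After 30 steps the linear quantity has grown about 31-fold, while the doubling one has grown roughly a billion-fold.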

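I believe that in the coming decades we will once again do something remarkable. We will use technology to expand the neocortex again, except that this time we will not be limited by the space inside our skulls, so the expansion has no fixed limit. The resulting increase in quantity will once again bring about a qualitative leap in culture and technology.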

Thank you!

Biology, Nanotechnology and AI Merging into Superhumans: Ray Kurzweil's Predictions

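According to Sina Tech, Ray Kurzweil, with his light blond hair and broad forehead, has the unhurried composure of an elder: he answers questions at a measured pace, combining ideas with examples to explain puzzling technical issues. He holds five titles: American author, computer scientist, inventor, futurist, and director of engineering at Google. He also has some 20 honorary doctorates and the National Medal of Technology, the nation's highest honor for technology, presented by the US president.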

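Of these five roles, his favorite is director of engineering at Google, because it combines his other identities and lets him bring technology to the whole world. "Google has billions of users, so the work we do can have a direct impact on the world," he said.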

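The Wall Street Journal has called this legendary figure "the restless genius," and Forbes has called him "the ultimate thinking machine." So, beyond his natural gifts, what is his secret to staying creative and ahead of his time?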

What He Most Wants to Do: Change the World Through Technology

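Ray is best known as a futurist, and his predictions about technology span several decades. Some of them sound far-fetched, even surreal, but precisely because technology is growing exponentially, the future really is hard to imagine.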

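As an article on Kurzweil Accelerating Intelligence, the technology website he founded, points out, some technology predictions come true not only because technology grows exponentially but also because, once a prediction is put forward, people start paying attention and investing money and talent in research, which eventually drives a breakthrough in that field. As a futurist, what Ray most wants is to improve the world through technology. He also writes books to promote the appeal of technology; his books The Age of Spiritual Machines and The Singularity Is Near both reached number one in Amazon's science and technology bestseller rankings.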

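He is able to make bold, forward-looking and valuable predictions because he is exceptionally gifted and thinks hard, and also because of his decades of immersion in technology. Still, people want to know his secret for keeping up the drive to think and innovate.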

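His answer is a trick of working backward: "I imagine that five years from now I'm giving a talk, and I ask how I would explain my new invention. That invention doesn't exist yet, but I think about what I would say and what problem it actually solves. Then I look back: if that is what the future talk will be, I work out what I need to do now and what the steps are. That's how I invent."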

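The lesson he wants to share with young people is to measure information technology by its price-performance, using cost to gauge how competitive a technology is. He points out that metrics grounded in real costs like this are highly predictable and can forecast what will happen three to five years out, for example in communications or biotechnology.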
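Kurzweil's advice to track price-performance can be made concrete with a small trend fit. This is a minimal sketch under stated assumptions: it assumes the metric (for example, computations per second per dollar) grows exponentially, fits a straight line to its base-2 logarithm by least squares, and reads off the doubling time. The function names `fit_log2_trend`, `doubling_time` and `project_trend` are hypothetical, and the sample data are synthetic, not real measurements.

```python
import math


def fit_log2_trend(years, values):
    """Least-squares line through (year, log2(value)).

    Returns (doublings_per_year, mean_year, mean_log2). Centering on the means
    keeps the arithmetic stable when years are large numbers like 2014.
    """
    if len(years) != len(values) or len(years) < 2:
        raise ValueError("need at least two (year, value) pairs")
    logs = [math.log2(v) for v in values]
    mean_x = sum(years) / len(years)
    mean_y = sum(logs) / len(logs)
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    return sxy / sxx, mean_x, mean_y


def doubling_time(years, values):
    """Years needed for price-performance to double, assuming exponential growth."""
    slope, _, _ = fit_log2_trend(years, values)
    return 1.0 / slope


def project_trend(years, values, target_year):
    """Extrapolate the fitted exponential trend to target_year."""
    slope, mean_x, mean_y = fit_log2_trend(years, values)
    return 2 ** (mean_y + slope * (target_year - mean_x))


# Synthetic example (not real data): a metric that doubles every two years.
years = [2010, 2011, 2012, 2013, 2014]
values = [1.0, 2 ** 0.5, 2.0, 2 ** 1.5, 4.0]
print(doubling_time(years, values))        # about 2.0
print(project_trend(years, values, 2017))  # about 11.31, i.e. 2 ** 3.5
```

Fitting in log space is what makes an exponential trend look like a straight line; a forecast is only as good as the assumption that the trend continues.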

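That said, 30 years ago Ray made predictions about what would happen 30 and 50 years later. He admits that although he cannot predict everything, he can at least predict some aspects of the future.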

His Current Work: Getting Computers to Understand Language

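Because Ray is an entrepreneur himself, many people wonder why he chose to work for Google. He gladly took the job because Google could help him achieve his goal of changing the world through technology. In fact, his work at Google is also future-oriented: "There's no real difference between my technical work at Google and my role as a futurist, because we are designing technology for 2018 or 2020, so we think about how technology will develop." Ray leads a team working on natural language understanding, aiming to grasp the meaning of language. Normally, a search starts with keywords and finds documents containing those keywords, but the search engine itself does not understand what the documents mean. The next step for search technology is semantic understanding.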
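The keyword approach just described, which finds documents containing the query words without understanding them, can be sketched as a tiny inverted index. This is a hypothetical illustration, not how Google's search engine is implemented; the documents in `DOCS` and the helper names are made up.

```python
from collections import defaultdict

# Hypothetical toy corpus; the documents and ids are invented.
DOCS = {
    1: "The heart cannot repair itself.",
    2: "Reprogrammed cells can help the heart repair damage.",
    3: "Cardiac tissue regeneration with stem cells.",
}


def tokenize(text):
    """Lowercase, split on whitespace and strip simple punctuation."""
    return [word.strip(".,!?") for word in text.lower().split()]


def build_index(docs):
    """Inverted index: word -> set of ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index


def keyword_search(index, query):
    """Ids of documents containing every query word. No notion of meaning."""
    words = tokenize(query)
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result


index = build_index(DOCS)
print(keyword_search(index, "heart repair"))  # {1, 2}
```

Document 3 is about the same topic but shares no keywords with the query, so pure keyword matching misses it; closing that gap is what semantic understanding is meant to do.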

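He notes that natural language understanding requires grasping meaning and how that meaning is expressed. The difficulty is that we don't even know how to define "meaning" for natural language. "With speech recognition, you try to work out what someone is saying; with character recognition, you determine which letters are on the page. But if you want to understand what the content on the page means, how do you do that?" Ray's point is that we need to build a framework that lets computers understand natural language.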

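Ray has put forward a complete theory of how ideas are represented in the human brain, as a way of approaching how computers can understand language. His latest book, How to Create a Mind: The Secret of Human Thought Revealed, explores human thought and how ideas are represented in the brain. For example, when people translate, they first grasp what the text means, form symbols in the brain that represent that meaning, and then look for matching words in the other language. Today's language technology doesn't work that way; it only tries to match sequences of characters.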

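The Google Now team that Ray leads is developing natural language understanding. He says that in the long run, search engines will understand the meaning behind keywords. "This is a long-term goal, and we need to move step by step to get computers to understand language. That is the work I'm doing," he said.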

Five Predictions for Future Technology

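Ray has made five predictions about future technology, covering human genetic reprogramming, solar energy, 3D printing, search engines and virtual reality. Some of them sound hard to believe, so Ray explained them in detail.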

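1. By 2020, the human body can be reprogrammed, allowing people to stay free of disease and avoid aging. Reprogramming an individual's biological information means changing the body's underlying information processes through medical intervention. Many people are skeptical of this prediction; Ray explains it as follows: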

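Suppose a disease is caused by a single missing gene. Most diseases are not caused by a single gene, but this kind is an exception, and it can be treated by replacing that gene. This is already being tried in practice: cells are taken from the body, the gene is added outside the body while the process is monitored under a microscope, the gene is replaced in millions of cells, and the cells are then injected back into the patient to cure the disease.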

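Many people around the world suffer from heart disease, for which there has been no good treatment, because the heart cannot repair itself. Ray's father developed heart disease in the 1960s and died in 1970. Today, by extracting cells and reprogramming them, we can get the heart to repair its own damage. There are many more examples like this. By changing genes, we will find a whole new class of treatments.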

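A similar process can be seen in cancerous tumors. Cancer treatment can target the stem cells in a tumor: these cells are very good at replicating, and by reprogramming them to stop that replication, we can halt the progress of the cancer. Thousands of projects are currently trying to reprogram genes to treat many gene-related diseases.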

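2. By 2029, artificial intelligence will surpass human intelligence, and by 2045 we will reach the technological singularity: technology will produce superintelligent machines, and humans and machines will become even more closely integrated.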

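Stephen Hawking and Elon Musk have both voiced concerns about the safety of AI, warning that it could be enormously destructive. Ray responded: "He seems to be saying that superintelligent AI will arrive within the next five years. That is very unrealistic. My view is that it will take another 15 years for AI to reach human level, and even that figure could be wrong."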

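AI experts generally believe that superintelligent AI is 20 to 30 years away, which is not long from the perspective of human history. Ray has written that until that point arrives, we can keep AI safe by strengthening human oversight and promoting discussion through social institutions.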

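In fact, every technology is a double-edged sword, with both good and bad sides. "Fire can keep you warm and cook your food, but it can also burn your house down. Another example is biotechnology: today it can be used to treat genetic diseases, but 30 years ago people worried that terrorists would use it to turn common viruses into deadly weapons," he said. He notes that international biotechnology conferences are held regularly to draw up guidelines that keep the technology safe and prevent biotech accidents, whether intentional or not. "It's a complex mechanism, but it is working well; we haven't seen real problems in the past 30 years. I think that's a good model for developing AI."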

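In his view, AI will eventually be deeply integrated with human civilization. AI will have access to all of human knowledge, and humans will be able to converse with it. AI will be able to do what humans can do, at a high level of intelligence, and will keep developing. It will be deeply woven into society, and the world will become more peaceful: with better communication technology we will understand one another better, which will greatly reduce violence in the world. So as AI becomes part of human civilization, that civilization will become better.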

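3. 3D printing, a hot technology in recent years, will not enter its golden age until 2020, and large-scale replacement of traditional manufacturing is five years away. He says that every technology cycle goes through gradual growth, a rapid boom, a crash, and then a return to normal, and 3D printing still needs time to truly mature.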

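He explained: "With 3D printing, I hope people learn from experience and make a better transition. Some think 3D printing will revolutionize the world next year, but I think 2020 is more likely. There are already some interesting applications, such as printing human organs, but we still need to perfect it."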

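4. Besides 3D printing, which other technologies are following a similar curve? He says virtual reality is one; it still needs a few more years before a real breakthrough. By 2020, people will interact in fully immersive virtual environments, and in the 2030s those environments will add the sense of touch.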

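He notes that virtual reality is very interesting for the gaming industry, since 3D virtual reality creates a sense of immersion. But it won't be until 2020 that people use the technology regularly, for example built into contact lenses, letting them talk with someone far away as if face to face, with a strong sense of realism; we may even be able to touch each other.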

5. Search engines will understand natural language within five years.


Tuesday, February 17, 2015

Wednesday, January 7, 2015

From Machine Learning to a Revolution in Human Social Structures: Giant Cloud Robots Will Change Human Society Within 20 Years

It used to be that if you wanted to get a computer to do something new, you would have to program it. Now, programming, for those of you here that haven't done it yourself, requires laying out in excruciating detail every single step that you want the computer to do in order to achieve your goal. Now, if you want to do something that you don't know how to do yourself, then this is going to be a great challenge.

So this was the challenge faced by this man, Arthur Samuel. In 1956, he wanted to get this computer to be able to beat him at checkers. How can you write a program, lay out in excruciating detail, how to be better than you at checkers? So he came up with an idea: he had the computer play against itself thousands of times and learn how to play checkers. And indeed it worked, and in fact, by 1962, this computer had beaten the Connecticut state champion.
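Samuel's idea of letting the computer improve by playing against itself can be illustrated on a much smaller game. This is a toy sketch, not a reconstruction of Samuel's checkers program: it uses tabular Q-learning with self-play on a hypothetical Nim variant in which players alternately remove one to three stones and whoever takes the last stone wins. The names `train_by_self_play` and `best_move`, the episode count and the seed are assumptions. Nobody tells the program the winning strategy; it has to find it from its own games.

```python
import random

# Hypothetical toy game: a pile of stones, players alternately remove 1-3,
# and whoever takes the last stone wins.
MAX_PILE = 10
MOVES = (1, 2, 3)


def legal_moves(pile):
    return [m for m in MOVES if m <= pile]


def train_by_self_play(episodes=20000, seed=0):
    """Learn q[pile][move]: value of a move for the player about to make it.

    One table serves both players (self-play). Moves are picked at random to
    explore; the update is negamax Q-learning: leaving the opponent a pile is
    worth minus the best the opponent can do from there.
    """
    rng = random.Random(seed)
    q = {p: {m: 0.0 for m in legal_moves(p)} for p in range(1, MAX_PILE + 1)}
    for _ in range(episodes):
        pile = rng.randint(1, MAX_PILE)
        while pile > 0:
            move = rng.choice(legal_moves(pile))
            remaining = pile - move
            if remaining == 0:
                target = 1.0  # took the last stone: a win
            else:
                target = -max(q[remaining].values())
            # The game is deterministic, so a learning rate of 1 is enough.
            q[pile][move] = target
            pile = remaining
    return q


def best_move(q, pile):
    return max(q[pile], key=q[pile].get)


q = train_by_self_play()
print([best_move(q, p) for p in (1, 2, 3, 5, 6, 7)])
```

After training, the learned policy always tries to leave the opponent a multiple of four stones, which is the known winning strategy for this game.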

So Arthur Samuel was the father of machine learning, and I have a great debt to him, because I am a machine learning practitioner. I was the president of Kaggle, a community of over 200,000 machine learning practitioners. Kaggle puts up competitions to try and get them to solve previously unsolved problems, and it's been successful hundreds of times. So from this vantage point, I was able to find out a lot about what machine learning could do in the past, what it can do today, and what it could do in the future. Perhaps the first big commercial success of machine learning was Google. Google showed that it is possible to find information by using a computer algorithm, and this algorithm is based on machine learning. Since that time, there have been many commercial successes of machine learning. Companies like Amazon and Netflix use machine learning to suggest products that you might like to buy, movies that you might like to watch. Sometimes, it's almost creepy. Companies like LinkedIn and Facebook sometimes will tell you about who your friends might be and you have no idea how it did it, and this is because it's using the power of machine learning. These are algorithms that have learned how to do this from data rather than being programmed by hand.
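"You might also like" suggestions are a classic case of learning from data instead of hand-written rules. Below is a minimal item-based collaborative filtering sketch, one common textbook technique; it is an assumption for illustration, not a description of what Amazon or Netflix actually run, and the users, items and ratings in `RATINGS` are made up.

```python
import math

# Hypothetical ratings (user -> {item: rating from 1 to 5}); all values invented.
RATINGS = {
    "ann": {"alien": 5, "aliens": 5, "notting_hill": 1},
    "bob": {"alien": 4, "aliens": 5, "predator": 4},
    "cat": {"notting_hill": 5, "love_actually": 5},
    "dan": {"alien": 5, "predator": 5},
}


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as {key: value} dicts."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(ratings, user, top_n=3):
    """Item-based collaborative filtering.

    Each unseen item is scored by its similarity to the items the user has
    rated (items are similar when the same people rate them alike),
    weighted by the user's own ratings.
    """
    by_item = {}
    for person, items in ratings.items():
        for item, rating in items.items():
            by_item.setdefault(item, {})[person] = rating
    seen = ratings[user]
    scores = {
        item: sum(cosine(by_item[item], by_item[s]) * r for s, r in seen.items())
        for item in by_item
        if item not in seen
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend(RATINGS, "ann"))  # ['predator', 'love_actually']
```

No rule says "people who like alien films like Predator"; the preference falls out of who rated what.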

This is also how IBM was successful in getting Watson to beat the two world champions at "Jeopardy," answering incredibly subtle and complex questions like this one. ["The ancient 'Lion of Nimrud' went missing from this city's national museum in 2003 (along with a lot of other stuff)"] This is also why we are now able to see the first self-driving cars. If you want to be able to tell the difference between, say, a tree and a pedestrian, well, that's pretty important. We don't know how to write those programs by hand, but with machine learning, this is now possible. And in fact, this car has driven over a million miles without any accidents on regular roads.

So we now know that computers can learn, and computers can learn to do things that we actually sometimes don't know how to do ourselves, or maybe can do them better than us. One of the most amazing examples I've seen of machine learning happened on a project that I ran at Kaggle where a team run by a guy called Geoffrey Hinton from the University of Toronto won a competition for automatic drug discovery. Now, what was extraordinary here is not just that they beat all of the algorithms developed by Merck or the international academic community, but nobody on the team had any background in chemistry or biology or life sciences, and they did it in two weeks. How did they do this? They used an extraordinary algorithm called deep learning. So important was this that in fact the success was covered in The New York Times in a front page article a few weeks later. This is Geoffrey Hinton here on the left-hand side. Deep learning is an algorithm inspired by how the human brain works, and as a result it's an algorithm which has no theoretical limitations on what it can do. The more data you give it and the more computation time you give it, the better it gets.
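Deep learning stacks layers of simple artificial neurons and adjusts their connection weights by gradient descent, using backpropagation to compute how each weight affects the error. The sketch below is a deliberately tiny illustration of that training loop under arbitrary assumptions (one hidden layer of four units, pure Python, the XOR problem, learning rate 0.5, seed 0); it is not the network Hinton's team used, and the name `train` is hypothetical.

```python
import math
import random

# XOR: the output is 1 only when exactly one input is 1.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(hidden=4, epochs=5000, lr=0.5, seed=0):
    """Train a one-hidden-layer network on XOR by full-batch gradient descent.

    Returns (predict, initial_loss, final_loss), where loss is mean squared error.
    """
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        return h, sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)

    def loss():
        return sum((forward(x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

    initial = loss()
    for _ in range(epochs):
        g_w1 = [[0.0, 0.0] for _ in range(hidden)]
        g_b1 = [0.0] * hidden
        g_w2 = [0.0] * hidden
        g_b2 = 0.0
        for x, t in DATA:
            h, y = forward(x)
            # Backpropagation: chain rule through the output sigmoid...
            d_out = 2 * (y - t) * y * (1 - y) / len(DATA)
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                # ...and through each hidden sigmoid.
                d_hid = d_out * w2[j] * h[j] * (1 - h[j])
                g_b1[j] += d_hid
                g_w1[j][0] += d_hid * x[0]
                g_w1[j][1] += d_hid * x[1]
        # Gradient-descent step on every parameter.
        for j in range(hidden):
            w2[j] -= lr * g_w2[j]
            b1[j] -= lr * g_b1[j]
            w1[j][0] -= lr * g_w1[j][0]
            w1[j][1] -= lr * g_w1[j][1]
        b2 -= lr * g_b2

    return (lambda x: forward(x)[1]), initial, loss()


predict, initial_loss, final_loss = train()
print(initial_loss, final_loss)
print([round(predict(x), 2) for x, _ in DATA])
```

The error should fall during training; whether this particular tiny network fully solves XOR depends on its random starting weights. Real deep networks apply the same idea with many more layers, units and examples.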

The New York Times also showed in this article another extraordinary result of deep learning which I'm going to show you now. It shows that computers can listen and understand.

(Video) Richard Rashid: Now, the last step that I want to be able to take in this process is to actually speak to you in Chinese. Now the key thing there is, we've been able to take a large amount of information from many Chinese speakers and produce a text-to-speech system that takes Chinese text and converts it into Chinese language, and then we've taken an hour or so of my own voice and we've used that to modulate the standard text-to-speech system so that it would sound like me. Again, the result's not perfect. There are in fact quite a few errors. (In Chinese) (Applause) There's much work to be done in this area. (In Chinese)

Jeremy Howard: Well, that was at a machine learning conference in China. It's not often, actually, at academic conferences that you do hear spontaneous applause, although of course sometimes at TEDx conferences, feel free. Everything you saw there was happening with deep learning. (Applause) Thank you. The transcription in English was deep learning. The translation to Chinese and the text in the top right, deep learning, and the construction of the voice was deep learning as well.

So deep learning is this extraordinary thing. It's a single algorithm that can seem to do almost anything, and I discovered that a year earlier, it had also learned to see. In this obscure competition from Germany called the German Traffic Sign Recognition Benchmark, deep learning had learned to recognize traffic signs like this one. Not only could it recognize the traffic signs better than any other algorithm, the leaderboard actually showed it was better than people, about twice as good as people. So by 2011, we had the first example of computers that can see better than people. Since that time, a lot has happened. In 2012, Google announced that they had a deep learning algorithm watch YouTube videos and crunched the data on 16,000 computers for a month, and the computer independently learned about concepts such as people and cats just by watching the videos. This is much like the way that humans learn. Humans don't learn by being told what they see, but by learning for themselves what these things are. Also in 2012, Geoffrey Hinton, who we saw earlier, won the very popular ImageNet competition, looking to try to figure out from one and a half million images what they're pictures of. As of 2014, we're now down to a six percent error rate in image recognition. This is better than people, again.

So machines really are doing an extraordinarily good job of this, and it is now being used in industry. For example, Google announced last year that they had mapped every single location in France in two hours, and the way they did it was that they fed street view images into a deep learning algorithm to recognize and read street numbers. Imagine how long it would have taken before: dozens of people, many years. This is also happening in China. Baidu is kind of the Chinese Google, I guess, and what you see here in the top left is an example of a picture that I uploaded to Baidu's deep learning system, and underneath you can see that the system has understood what that picture is and found similar images. The similar images actually have similar backgrounds, similar directions of the faces, even some with their tongue out. This is clearly not looking at the text of a web page. All I uploaded was an image. So we now have computers which really understand what they see and can therefore search databases of hundreds of millions of images in real time.
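Once a deep network has turned each image into a vector of learned features (an "embedding"), finding similar images reduces to a nearest-neighbor search over those vectors. This is a hedged sketch of that final step only: the three-number vectors and file names in `LIBRARY` are invented stand-ins for real embeddings, which typically have hundreds or thousands of dimensions, and nothing here describes Baidu's actual system.

```python
import math

# Hypothetical 3-number "embeddings"; real ones come from a trained network
# and have far more dimensions.
LIBRARY = {
    "dog_tongue_out.jpg": [0.9, 0.1, 0.8],
    "dog_sleeping.jpg": [0.8, 0.2, 0.1],
    "street_sign.jpg": [0.0, 0.9, 0.1],
}


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query, library, k=2):
    """Names of the k stored images whose embeddings best match the query's."""
    ranked = sorted(library, key=lambda name: cosine_similarity(query, library[name]), reverse=True)
    return ranked[:k]


uploaded = [0.85, 0.05, 0.9]  # embedding of the uploaded photo (invented)
print(most_similar(uploaded, LIBRARY))  # ['dog_tongue_out.jpg', 'dog_sleeping.jpg']
```

A full sort is fine for three images; systems searching hundreds of millions of images in real time rely on approximate nearest-neighbor indexes instead.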

So what does it mean now that computers can see? Well, it's not just that computers can see. In fact, deep learning has done more than that. Complex, nuanced sentences like this one are now understandable with deep learning algorithms. As you can see here, this Stanford-based system showing the red dot at the top has figured out that this sentence is expressing negative sentiment. Deep learning now in fact is near human performance at understanding what sentences are about and what it is saying about those things. Also, deep learning has been used to read Chinese, again at about native Chinese speaker level. This algorithm was developed in Switzerland by people, none of whom speak or understand any Chinese. As I say, this deep learning system is about the best in the world for this, even compared to native human understanding.

This is a system that we put together at my company which shows putting all this stuff together. These are pictures which have no text attached, and as I'm typing in here sentences, in real time it's understanding these pictures and figuring out what they're about and finding pictures that are similar to the text that I'm writing. So you can see, it's actually understanding my sentences and actually understanding these pictures. I know that you've seen something like this on Google, where you can type in things and it will show you pictures, but actually what it's doing is it's searching the webpage for the text. This is very different from actually understanding the images. This is something that computers have only been able to do for the first time in the last few months.

So we can see now that computers can not only see but they can also read, and, of course, we've shown that they can understand what they hear. Perhaps it's not surprising now that I'm going to tell you they can write. Here is some text that I generated using a deep learning algorithm yesterday. And here is some text that an algorithm out of Stanford generated. Each of these sentences was generated by a deep learning algorithm to describe each of those pictures. This algorithm has never seen a man in a black shirt playing a guitar before. It's seen a man before, it's seen black before, it's seen a guitar before, but it has independently generated this novel description of this picture. We're still not quite at human performance here, but we're close. In tests, humans prefer the computer-generated caption one out of four times. Now, this system is only two weeks old, so probably within the next year, the computer algorithm will be well past human performance at the rate things are going. So computers can also write.

So we put all this together and it leads to very exciting opportunities. For example, in medicine, a team in Boston announced that they had discovered dozens of new clinically relevant features of tumors which help doctors make a prognosis of a cancer. Very similarly, in Stanford, a group there announced that, looking at tissues under magnification, they've developed a machine learning-based system which in fact is better than human pathologists at predicting survival rates for cancer sufferers. In both of these cases, not only were the predictions more accurate, but they generated new insightful science. In the radiology case, they were new clinical indicators that humans can understand. In this pathology case, the computer system actually discovered that the cells around the cancer are as important as the cancer cells themselves in making a diagnosis. This is the opposite of what pathologists had been taught for decades. In each of those two cases, they were systems developed by a combination of medical experts and machine learning experts, but as of last year, we're now beyond that too. This is an example of identifying cancerous areas of human tissue under a microscope. The system being shown here can identify those areas more accurately, or about as accurately, as human pathologists, but was built entirely with deep learning using no medical expertise by people who have no background in the field. Similarly, here, this neuron segmentation. We can now segment neurons about as accurately as humans can, but this system was developed with deep learning using people with no previous background in medicine.

So myself, as somebody with no previous background in medicine, I seem to be entirely well qualified to start a new medical company, which I did. I was kind of terrified of doing it, but the theory seemed to suggest that it ought to be possible to do very useful medicine using just these data analytic techniques. And thankfully, the feedback has been fantastic, not just from the media but from the medical community, who have been very supportive. The theory is that we can take the middle part of the medical process and turn that into data analysis as much as possible, leaving doctors to do what they're best at. I want to give you an example. It now takes us about 15 minutes to generate a new medical diagnostic test and I'll show you that in real time now, but I've compressed it down to three minutes by cutting some pieces out. Rather than showing you creating a medical diagnostic test, I'm going to show you a diagnostic test of car images, because that's something we can all understand.

So here we're starting with about 1.5 million car images, and I want to create something that can split them into the angle of the photo that's being taken. So these images are entirely unlabeled, so I have to start from scratch. With our deep learning algorithm, it can automatically identify areas of structure in these images. So the nice thing is that the human and the computer can now work together. So the human, as you can see here, is telling the computer about areas of interest which it wants the computer then to try and use to improve its algorithm. Now, these deep learning systems actually are in 16,000-dimensional space, so you can see here the computer rotating this through that space, trying to find new areas of structure. And when it does so successfully, the human who is driving it can then point out the areas that are interesting. So here, the computer has successfully found areas, for example, angles. So as we go through this process, we're gradually telling the computer more and more about the kinds of structures we're looking for. You can imagine in a diagnostic test this would be a pathologist identifying areas of pathosis, for example, or a radiologist indicating potentially troublesome nodules. And sometimes it can be difficult for the algorithm. In this case, it got kind of confused. The fronts and the backs of the cars are all mixed up. So here we have to be a bit more careful, manually selecting these fronts as opposed to the backs, then telling the computer that this is a type of group that we're interested in.

So we do that for a while, we skip over a little bit, and then we train the machine learning algorithm based on these couple of hundred things, and we hope that it's gotten a lot better. You can see, it's now started to fade some of these pictures out, showing us that it already is recognizing how to understand some of these itself. We can then use this concept of similar images, and using similar images, you can now see, the computer at this point is able to entirely find just the fronts of cars. So at this point, the human can tell the computer, okay, yes, you've done a good job of that.
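The loop described here, a person labeling a few examples and the computer generalizing from them, can be sketched with a nearest-centroid rule over precomputed embeddings. This is a hypothetical illustration, not the tool shown in the talk: the two-number vectors in `EMBEDDINGS`, the item names and the labels are invented, and a real system would use features from a trained deep network rather than simple class averages.

```python
import math

# Hypothetical 2-D embeddings of car photos; real ones would be learned
# by a deep network and have thousands of dimensions.
EMBEDDINGS = {
    "car1": (0.90, 0.10), "car2": (0.80, 0.20), "car3": (0.85, 0.15),
    "car4": (0.10, 0.90), "car5": (0.20, 0.80), "car6": (0.15, 0.95),
}


def centroid(points):
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def propagate_labels(embeddings, seed_labels):
    """Give every item the label whose hand-labeled examples it is closest to.

    seed_labels holds the few items a person has labeled by hand.
    """
    groups = {}
    for item, label in seed_labels.items():
        groups.setdefault(label, []).append(embeddings[item])
    centers = {label: centroid(points) for label, points in groups.items()}
    return {
        item: min(centers, key=lambda label: math.dist(vec, centers[label]))
        for item, vec in embeddings.items()
    }


labels = propagate_labels(EMBEDDINGS, {"car1": "front", "car4": "back"})
print(labels)
```

When the reviewer spots a wrongly labeled item, adding it to the seed labels with the correct label and calling `propagate_labels` again is one more round of the loop.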

Sometimes, of course, even at this point it's still difficult to separate out groups. In this case, even after we let the computer try to rotate this for a while, we still find that the left-side and right-side pictures are all mixed up together. So we can again give the computer some hints, and we say, okay, try and find a projection that separates out the left sides and the right sides as much as possible using this deep learning algorithm. And giving it that hint, ah, okay, it's been successful. It's managed to find a way of thinking about these objects that separates them out.
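The hint "find a projection that separates the left sides and the right sides" resembles a classic technique, Fisher's linear discriminant, which picks the direction along which two labeled groups are furthest apart relative to their spread. The talk does not say which method its tool uses; this swapped-in textbook technique is shown for intuition only, in two dimensions with made-up points (`LEFT_SIDES`, `RIGHT_SIDES`), whereas the system described works in a space with thousands of dimensions.

```python
# Hypothetical 2-D feature vectors for two groups of car photos.
LEFT_SIDES = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.5), (3.0, 4.0)]
RIGHT_SIDES = [(1.0, 0.0), (2.0, 1.0), (3.0, 1.5), (4.0, 3.0)]


def mean(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def fisher_direction(class_a, class_b):
    """Fisher's linear discriminant for 2-D points: w = S_w^-1 (m_b - m_a)."""
    ma, mb = mean(class_a), mean(class_b)
    # Within-class scatter S_w = sum over both classes of (x - m)(x - m)^T
    sxx = sxy = syy = 0.0
    for points, m in ((class_a, ma), (class_b, mb)):
        for x, y in points:
            dx, dy = x - m[0], y - m[1]
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy
    det = sxx * syy - sxy * sxy
    if det == 0:
        raise ValueError("within-class scatter is singular")
    # Closed-form inverse of the symmetric 2x2 matrix [[sxx, sxy], [sxy, syy]]
    diff_x, diff_y = mb[0] - ma[0], mb[1] - ma[1]
    return ((syy * diff_x - sxy * diff_y) / det, (-sxy * diff_x + sxx * diff_y) / det)


def project_onto(point, w):
    return point[0] * w[0] + point[1] * w[1]


w = fisher_direction(LEFT_SIDES, RIGHT_SIDES)
print(sorted(round(project_onto(p, w), 2) for p in LEFT_SIDES))
print(sorted(round(project_onto(p, w), 2) for p in RIGHT_SIDES))
```

Along the x-axis alone the two groups overlap, but along the learned direction every right-side point lands beyond every left-side point.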

So you get the idea here. This is a case not where the human is being replaced by a computer, but where they're working together. What we're doing here is we're replacing something that used to take a team of five or six people about seven years and replacing it with something that takes 15 minutes for one person acting alone.

So this process takes about four or five iterations. You can see we now have 62 percent of our 1.5 million images classified correctly. And at this point, we can start to quite quickly grab whole big sections, check through them to make sure that there's no mistakes. Where there are mistakes, we can let the computer know about them. And using this kind of process for each of the different groups, we are now up to an 80 percent success rate in classifying the 1.5 million images. And at this point, it's just a case of finding the small number that aren't classified correctly, and trying to understand why. And using that approach, by 15 minutes we get to 97 percent classification rates.

So this kind of technique could allow us to fix a major problem, which is that there's a lack of medical expertise in the world. The World Economic Forum says that there's between a 10x and a 20x shortage of physicians in the developing world, and it would take about 300 years to train enough people to fix that problem. So imagine if we could help enhance their efficiency using these deep learning approaches.

So I'm very excited about the opportunities. I'm also concerned about the problems. The problem here is that every area in blue on this map is somewhere where services are over 80 percent of employment. What are services? These are services. These are also the exact things that computers have just learned how to do. So 80 percent of the world's employment in the developed world is stuff that computers have just learned how to do. What does that mean? Well, it'll be fine. They'll be replaced by other jobs. For example, there will be more jobs for data scientists. Well, not really. It doesn't take data scientists very long to build these things. For example, these four algorithms were all built by the same guy. So if you think, oh, it's all happened before, we've seen the results in the past of when new things come along and they get replaced by new jobs, what are these new jobs going to be? It's very hard for us to estimate this, because human performance grows at this gradual rate, but we now have a system, deep learning, that we know actually grows in capability exponentially. And we're here. So currently, we see the things around us and we say, "Oh, computers are still pretty dumb." Right? But in five years' time, computers will be off this chart. So we need to be starting to think about this capability right now.

We have seen this once before, of course. In the Industrial Revolution, we saw a step change in capability thanks to engines. The thing is, though, that after a while, things flattened out. There was social disruption, but once engines were used to generate power in all the situations, things really settled down. The Machine Learning Revolution is going to be very different from the Industrial Revolution, because the Machine Learning Revolution, it never settles down. The better computers get at intellectual activities, the more they can build better computers to be better at intellectual capabilities, so this is going to be a kind of change that the world has actually never experienced before, so your previous understanding of what's possible is different.

This is already impacting us. In the last 25 years, as capital productivity has increased, labor productivity has been flat, in fact even a little bit down.

So I want us to start having this discussion now. I know that when I often tell people about this situation, people can be quite dismissive. Well, computers can't really think, they don't emote, they don't understand poetry, we don't really understand how they work. So what? Computers right now can do the things that humans spend most of their time being paid to do, so now's the time to start thinking about how we're going to adjust our social structures and economic structures to be aware of this new reality. Thank you!

So this was the challenge faced by this man, Arthur Samuel. In 1956, he wanted to get this computer to be able to beat him at checkers. How can you write a program, lay out in excruciating detail, how to be better than you at checkers? So he came up with an idea: he had the computer play against itself thousands of times and learn how to play checkers. And indeed it worked, and in fact, by 1962, this computer had beaten the Connecticut state champion.

So Arthur Samuel was the father of machine learning, and I have a great debt to him, because I am a machine learning practitioner. I was the president of Kaggle, a community of over 200,000 machine learning practictioners. Kaggle puts up competitions to try and get them to solve previously unsolved problems, and it's been successful hundreds of times. So from this vantage point, I was able to find out a lot about what machine learning can do in the past, can do today, and what it could do in the future. Perhaps the first big success of machine learning commercially was Google. Google showed that it is possible to find information by using a computer algorithm, and this algorithm is based on machine learning. Since that time, there have been many commercial successes of machine learning. Companies like Amazon and Netflix use machine learning to suggest products that you might like to buy, movies that you might like to watch. Sometimes, it's almost creepy. Companies like LinkedIn and Facebook sometimes will tell you about who your friends might be and you have no idea how it did it, and this is because it's using the power of machine learning. These are algorithms that have learned how to do this from data rather than being programmed by hand.

This is also how IBM was successful in getting Watson to beat the two world champions at "Jeopardy," answering incredibly subtle and complex questions like this one. ["The ancient 'Lion of Nimrud' went missing from this city's national museum in 2003 (along with a lot of other stuff)"] This is also why we are now able to see the first self-driving cars. If you want to be able to tell the difference between, say, a tree and a pedestrian, well, that's pretty important. We don't know how to write those programs by hand, but with machine learning, this is now possible. And in fact, this car has driven over a million miles without any accidents on regular roads.

So we now know that computers can learn, and computers can learn to do things that we actually sometimes don't know how to do ourselves, or maybe can do them better than us. One of the most amazing examples I've seen of machine learning happened on a project that I ran at Kaggle where a team run by a guy called Geoffrey Hinton from the University of Toronto won a competition for automatic drug discovery. Now, what was extraordinary here is not just that they beat all of the algorithms developed by Merck or the international academic community, but nobody on the team had any background in chemistry or biology or life sciences, and they did it in two weeks. How did they do this? They used an extraordinary algorithm called deep learning. So important was this that in fact the success was covered in The New York Times in a front page article a few weeks later. This is Geoffrey Hinton here on the left-hand side. Deep learning is an algorithm inspired by how the human brain works, and as a result it's an algorithm which has no theoretical limitations on what it can do. The more data you give it and the more computation time you give it, the better it gets.

The New York Times also showed in this article another extraordinary result of deep learning which I'm going to show you now. It shows that computers can listen and understand.

(Video) Richard Rashid: Now, the last step that I want to be able to take in this process is to actually speak to you in Chinese. Now the key thing there is, we've been able to take a large amount of information from many Chinese speakers and produce a text-to-speech system that takes Chinese text and converts it into Chinese language, and then we've taken an hour or so of my own voice and we've used that to modulate the standard text-to-speech system so that it would sound like me. Again, the result's not perfect. There are in fact quite a few errors. (In Chinese) (Applause) There's much work to be done in this area. (In Chinese)

Jeremy Howard: Well, that was at a machine learning conference in China. It's not often, actually, at academic conferences that you do hear spontaneous applause, although of course sometimes at TEDx conferences, feel free. Everything you saw there was happening with deep learning. (Applause) Thank you. The transcription in English was deep learning. The translation to Chinese and the text in the top right, deep learning, and the construction of the voice was deep learning as well.

So deep learning is this extraordinary thing. It's a single algorithm that can seem to do almost anything, and I discovered that a year earlier, it had also learned to see. In this obscure competition from Germany called the German Traffic Sign Recognition Benchmark, deep learning had learned to recognize traffic signs like this one. Not only could it recognize the traffic signs better than any other algorithm, the leaderboard actually showed it was better than people, about twice as good as people. So by 2011, we had the first example of computers that can see better than people. Since that time, a lot has happened. In 2012, Google announced that they had a deep learning algorithm watch YouTube videos and crunched the data on 16,000 computers for a month, and the computer independently learned about concepts such as people and cats just by watching the videos. This is much like the way that humans learn. Humans don't learn by being told what they see, but by learning for themselves what these things are. Also in 2012, Geoffrey Hinton, who we saw earlier, won the very popular ImageNet competition, looking to try to figure out from one and a half million images what they're pictures of. As of 2014, we're now down to a six percent error rate in image recognition. This is better than people, again.

So machines really are doing an extraordinarily good job of this, and it is now being used in industry. For example, Google announced last year that they had mapped every single location in France in two hours, and the way they did it was that they fed street view images into a deep learning algorithm to recognize and read street numbers. Imagine how long it would have taken before: dozens of people, many years. This is also happening in China. Baidu is kind of the Chinese Google, I guess, and what you see here in the top left is an example of a picture that I uploaded to Baidu's deep learning system, and underneath you can see that the system has understood what that picture is and found similar images. The similar images actually have similar backgrounds, similar directions of the faces, even some with their tongue out. This is clearly not looking at the text of a web page. All I uploaded was an image. So we now have computers which really understand what they see and can therefore search databases of hundreds of millions of images in real time.

So what does it mean now that computers can see? Well, it's not just that computers can see. In fact, deep learning has done more than that. Complex, nuanced sentences like this one are now understandable with deep learning algorithms. As you can see here, this Stanford-based system showing the red dot at the top has figured out that this sentence is expressing negative sentiment. Deep learning now in fact is near human performance at understanding what sentences are about and what it is saying about those things. Also, deep learning has been used to read Chinese, again at about native Chinese speaker level. This algorithm was developed in Switzerland by people, none of whom speak or understand any Chinese. As I say, this deep learning system is about the best in the world for this, even compared to native human understanding.

This is a system that we put together at my company which shows putting all this stuff together. These are pictures which have no text attached, and as I'm typing in here sentences, in real time it's understanding these pictures and figuring out what they're about and finding pictures that are similar to the text that I'm writing. So you can see, it's actually understanding my sentences and actually understanding these pictures. I know that you've seen something like this on Google, where you can type in things and it will show you pictures, but actually what it's doing is it's searching the webpage for the text. This is very different from actually understanding the images. This is something that computers have only been able to do for the first time in the last few months.

So we can see now that computers can not only see but they can also read, and, of course, we've shown that they can understand what they hear. Perhaps not surprising now that I'm going to tell you they can write. Here is some text that I generated using a deep learning algorithm yesterday. And here is some text that an algorithm out of Stanford generated. Each of these sentences was generated by a deep learning algorithm to describe each of those pictures. This algorithm before has never seen a man in a black shirt playing a guitar. It's seen a man before, it's seen black before, it's seen a guitar before, but it has independently generated this novel description of this picture. We're still not quite at human performance here, but we're close. In tests, humans prefer the computer-generated caption one out of four times. This system is only two weeks old, so probably within the next year, the computer algorithm will be well past human performance at the rate things are going. So computers can also write.

So we put all this together and it leads to very exciting opportunities. For example, in medicine, a team in Boston announced that they had discovered dozens of new clinically relevant features of tumors which help doctors make a prognosis of a cancer. Very similarly, in Stanford, a group there announced that, looking at tissues under magnification, they've developed a machine learning-based system which in fact is better than human pathologists at predicting survival rates for cancer sufferers. In both of these cases, not only were the predictions more accurate, but they generated new insightful science. In the radiology case, they were new clinical indicators that humans can understand. In this pathology case, the computer system actually discovered that the cells around the cancer are as important as the cancer cells themselves in making a diagnosis. This is the opposite of what pathologists had been taught for decades. In each of those two cases, they were systems developed by a combination of medical experts and machine learning experts, but as of last year, we're now beyond that too. This is an example of identifying cancerous areas of human tissue under a microscope. The system being shown here can identify those areas more accurately, or about as accurately, as human pathologists, but was built entirely with deep learning using no medical expertise by people who have no background in the field. Similarly, here, this neuron segmentation. We can now segment neurons about as accurately as humans can, but this system was developed with deep learning using people with no previous background in medicine.

So myself, as somebody with no previous background in medicine, I seem to be entirely well qualified to start a new medical company, which I did. I was kind of terrified of doing it, but the theory seemed to suggest that it ought to be possible to do very useful medicine using just these data analytic techniques. And thankfully, the feedback has been fantastic, not just from the media but from the medical community, who have been very supportive. The theory is that we can take the middle part of the medical process and turn that into data analysis as much as possible, leaving doctors to do what they're best at. I want to give you an example. It now takes us about 15 minutes to generate a new medical diagnostic test and I'll show you that in real time now, but I've compressed it down to three minutes by cutting some pieces out. Rather than showing you creating a medical diagnostic test, I'm going to show you a diagnostic test of car images, because that's something we can all understand.

So here we're starting with about 1.5 million car images, and I want to create something that can split them into the angle of the photo that's being taken. So these images are entirely unlabeled, so I have to start from scratch. With our deep learning algorithm, it can automatically identify areas of structure in these images. So the nice thing is that the human and the computer can now work together. So the human, as you can see here, is telling the computer about areas of interest which it wants the computer then to try and use to improve its algorithm. Now, these deep learning systems actually are in 16,000-dimensional space, so you can see here the computer rotating this through that space, trying to find new areas of structure. And when it does so successfully, the human who is driving it can then point out the areas that are interesting. So here, the computer has successfully found areas, for example, angles. So as we go through this process, we're gradually telling the computer more and more about the kinds of structures we're looking for. You can imagine in a diagnostic test this would be a pathologist identifying areas of pathosis, for example, or a radiologist indicating potentially troublesome nodules. And sometimes it can be difficult for the algorithm. In this case, it got kind of confused. The fronts and the backs of the cars are all mixed up. So here we have to be a bit more careful, manually selecting these fronts as opposed to the backs, then telling the computer that this is a type of group that we're interested in.

So we do that for a while, we skip over a little bit, and then we train the machine learning algorithm based on these couple of hundred things, and we hope that it's gotten a lot better. You can see, it's now started to fade some of these pictures out, showing us that it already is recognizing how to understand some of these itself. We can then use this concept of similar images, and using similar images, you can now see, the computer at this point is able to entirely find just the fronts of cars. So at this point, the human can tell the computer, okay, yes, you've done a good job of that.
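The talk does not say which model is retrained on the couple of hundred hand-picked images. As a minimal sketch, assuming every image has already been mapped to a feature vector (the talk mentions a 16,000-dimensional space) and using a nearest-centroid classifier as a stand-in for the real algorithm, the "label a few, then classify the rest" step could look like this. The function names and the toy 2-D vectors are hypothetical.

```python
from math import dist  # Euclidean distance between two points (Python 3.8+)


def fit_centroids(labeled):
    """Average the feature vectors of each hand-labeled group (e.g. 'front', 'back')."""
    sums, counts = {}, {}
    for vec, label in labeled:
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, x in enumerate(vec):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [x / counts[label] for x in acc] for label, acc in sums.items()}


def predict(centroids, vec):
    """Assign an unlabeled image to the group whose centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], vec))


# Toy 2-D "features" standing in for real high-dimensional image embeddings.
labeled = [([0.9, 0.1], "front"), ([1.0, 0.2], "front"),
           ([0.1, 0.9], "back"), ([0.2, 1.0], "back")]
centroids = fit_centroids(labeled)
unlabeled = [[0.8, 0.3], [0.3, 0.7]]
print([predict(centroids, v) for v in unlabeled])  # ['front', 'back']
```

A nearest-centroid rule is used here only because it is short and shows the idea: each group the human defines becomes an average point, and every unlabeled image joins the nearest group. A real system would use a stronger model and feed corrections back in over several iterations.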

Sometimes, of course, even at this point it's still difficult to separate out groups. In this case, even after we let the computer try to rotate this for a while, we still find that the left-side and right-side pictures are all mixed up together. So we can again give the computer some hints, and we say, okay, try and find a projection that separates out the left sides and the right sides as much as possible using this deep learning algorithm. And giving it that hint -- ah, okay, it's been successful. It's managed to find a way of thinking about these objects that has separated them out.

So you get the idea here. This is a case not where the human is being replaced by a computer, but where they're working together. What we're doing here is we're replacing something that used to take a team of five or six people about seven years and replacing it with something that takes 15 minutes for one person acting alone.

So this process takes about four or five iterations. You can see we now have 62 percent of our 1.5 million images classified correctly. And at this point, we can start to quite quickly grab whole big sections, check through them to make sure that there's no mistakes. Where there are mistakes, we can let the computer know about them. And using this kind of process for each of the different groups, we are now up to an 80 percent success rate in classifying the 1.5 million images. And at this point, it's just a case of finding the small number that aren't classified correctly, and trying to understand why. And using that approach, by 15 minutes we get to 97 percent classification rates.

So this kind of technique could allow us to fix a major problem, which is that there's a lack of medical expertise in the world. The World Economic Forum says that there's between a 10x and a 20x shortage of physicians in the developing world, and it would take about 300 years to train enough people to fix that problem. So imagine if we could help enhance their efficiency using these deep learning approaches.

So I'm very excited about the opportunities. I'm also concerned about the problems. The problem here is that every area in blue on this map is somewhere where services are over 80 percent of employment. What are services? These are services. These are also the exact things that computers have just learned how to do. So 80 percent of employment in the developed world is stuff that computers have just learned how to do. What does that mean? Well, it'll be fine. They'll be replaced by other jobs. For example, there will be more jobs for data scientists. Well, not really. It doesn't take data scientists very long to build these things. For example, these four algorithms were all built by the same guy. So if you think, oh, it's all happened before, we've seen the results in the past of when new things come along and they get replaced by new jobs, what are these new jobs going to be? It's very hard for us to estimate this, because human performance grows at this gradual rate, but we now have a system, deep learning, that we know actually grows in capability exponentially. And we're here. So currently, we see the things around us and we say, "Oh, computers are still pretty dumb." Right? But in five years' time, computers will be off this chart. So we need to be starting to think about this capability right now.

We have seen this once before, of course. In the Industrial Revolution, we saw a step change in capability thanks to engines. The thing is, though, that after a while, things flattened out. There was social disruption, but once engines were used to generate power in all the situations, things really settled down. The Machine Learning Revolution is going to be very different from the Industrial Revolution, because the Machine Learning Revolution, it never settles down. The better computers get at intellectual activities, the more they can build better computers to be better at intellectual capabilities, so this is going to be a kind of change that the world has actually never experienced before, so your previous understanding of what's possible is different.

This is already impacting us. In the last 25 years, as capital productivity has increased, labor productivity has been flat, in fact even a little bit down.

So I want us to start having this discussion now. I know that when I often tell people about this situation, people can be quite dismissive. Well, computers can't really think, they don't emote, they don't understand poetry, we don't really understand how they work. So what? Computers right now can do the things that humans spend most of their time being paid to do, so now's the time to start thinking about how we're going to adjust our social structures and economic structures to be aware of this new reality. Thank you!

Andrew McAfee: The shape of future work in a world of robots (What will future jobs look like when robots become more intelligent?)

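Once machines can hear, speak, and understand basic human language, they will take over many human jobs, and human society will enter another revolution. Humans will be left only with high-level work such as creation, law, design, and planning. Even education as a whole will change: students will no longer depend mainly on teachers for knowledge; instead, online teaching machines delivered through apps will let children and students learn while playing with robots, and some students will come to see teachers as something that should be phased out. Robots and machines will not only take away many jobs; countries and companies that use robotic and machine automation will also far outpace those without these capabilities, and countries that lack them will be unable to resist the economic and political control of automated nations.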

Andrew McAfee: Are robots taking our jobs?

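When tens of millions of workers are unemployed or underemployed, many people become interested in how technology affects labor. When I started looking into this topic, I found that people were asking the right question but completely missing the key point. The question being asked is whether these digital technologies are affecting people's ability to earn a living, or, put another way, whether robots are taking our jobs. There is some evidence that they are.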

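When the Great Recession (2008-2012) ended, US GDP resumed its slow climb, and some other economic indicators also began to rebound, looking healthier and recovering more quickly. Corporate profits are quite high; in fact, if you include banks, they are higher than they have ever been. Business investment in tools and equipment, and in hardware and software, is at an all-time high. So businesses are taking out their checkbooks and spending, but they are not really hiring. This red line is the employment-to-population ratio, in other words, the percentage of working-age Americans who actually have a job. We can see that it dropped during the Great Recession, and it has not started to bounce back at all. ... So yes, robots are taking our jobs, but focusing only on that misses the point entirely. The real point is that humans are freed up to do other things. And what we are going to do, I am very confident, is reduce poverty and drudgery and misery around the world. I am very confident we are going to learn to live more lightly on the planet, and I am extremely confident that what we do with our new digital tools will be so profound and so beneficial that every previous change will look trivial by comparison. I am going to leave the last word to someone who was a pioneer of sorts in the evolution of the digital age: our old friend Ken Jennings. I am with him, and I am going to echo his words: "I, for one, welcome our new computer overlords." Thanks very much.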


Monday, May 12, 2014

What Jinha Lee's and Jeff Han's innovations teach us about human-computer interfaces: tablets and wearable computers still need more interface innovation

Jinha Lee: Reach into the computer and grab a pixel

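Throughout the history of computing, we have kept trying to narrow the gap between us and digital information: the gap between the physical world and the virtual world inside the screen, where our imagination can run free. And the gap has indeed narrowed, closer and closer still, until today it is less than a millimeter, the thickness of a touch-screen glass, and anyone can use a computer.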

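But I wondered: could there be no gap at all between us and computers? I started to imagine what that would look like. First, I built this tool, which can penetrate into the digital space: when you press it hard against the screen, it converts its physical body into pixels on the screen. Designers can materialize their ideas directly in 3D, and surgeons can practice on virtual organs inside the screen. With this tool, the boundary between us and the computer was broken.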

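Even so, our hands still remain outside the screen. Could we reach directly into the computer and work with digital information using the full dexterity of our hands? At Microsoft Applied Sciences, together with my mentor Cati Boulanger, I redesigned the computer and turned the little space above the keyboard into a digital workspace. By combining a transparent display with 3D depth cameras that sense your fingers and face, you can lift your hands off the keyboard, reach into this 3D space, and grab pixels directly with your hands.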

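Because windows and files have a real position in this space, selecting them is as easy as taking a book off a shelf. You can then flip through this book like this, and to highlight a sentence or a few words, you just swipe across the touch pad below the screen. Architects can stretch or rotate models directly with their two hands. So in these examples, we are really reaching into the digital world.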

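What about reversing the roles and having digital information come directly to us? I'm sure many of us have had the experience of buying and then returning items online. Now you don't have to worry about that. What you see here is an online augmented-reality fitting room. Once the system recognizes your body shape, the image is worn on your body through a head-mounted or see-through display.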

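Extending this idea, I started to wonder: instead of just seeing pixels in 3D space, could we make them physical, so that we can touch and feel them? What would such a future look like? At the MIT Media Lab, together with my advisor Hiroshi Ishii and my collaborator Rehmi Post, I created this physical pixel. The spherical magnet in this module acts like a 3D pixel in real life, which means that both a computer and a person can move it freely and simultaneously within this little 3D space. What we essentially did was remove gravity as a factor, moving the pixel by combining magnetic levitation, mechanical actuation, and sensing technologies. By programming the object digitally, we free it from the constraints of time and space, which means that human motion can now be recorded, played back, and preserved permanently in the physical world. So ballet can now be taught remotely, and Michael Jordan's legendary flight to the hoop can be physically replayed again and again. Students can use it to learn complex concepts such as planetary motion and physics; unlike a computer screen or a textbook, this is a real, tangible experience that you can touch and feel, and it leaves a strong impression. What is even more exciting is not just making the things inside the computer physical, but how our daily lives will change once many of the things around us become programmable.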

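As you have seen, digital information will not just give us knowledge or ideas; it will come alive right in front of us, as part of the world around us. We will no longer have to disconnect ourselves from this world.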

We started today with this barrier, but if the barrier no longer exists, the only thing that will limit us is our own imagination.

Thank you.

Jeff Han: The radical promise of the multi-touch interface

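I'm really, really excited to be here today, because I'm about to show you some technology we've just finished developing, and I'm really glad that you are among the first people in the world to see it in person, because I really, really think this is going to change, truly change, the way we interact with machines from now on.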

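Now, here is a rear-projected drafting table. It's about 36 inches wide, and it's equipped with a multi-touch sensor. Ordinary touch sensors that you see, like those on kiosks or interactive whiteboards, can only register one point of contact at a time. This thing lets you have multiple points of control at the same time. These points can come from both of my hands; I can use several fingers on one hand, or, if I want, I can use all ten fingers at once. You know, like this.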

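Now, multi-touch isn't a completely new concept. I mean, people like the well-known Bill Buxton were already experimenting with it back in the '80s. However, the approach I've built here is high-resolution, low-cost, and, perhaps most importantly, very easy to scale in hardware. So the technology itself, as I said, isn't the most exciting thing you're seeing here, except perhaps for its unusually low cost. What's really interesting is what you can do with it, and the interfaces you can build with it. So let's take a look.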

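For instance, here we have a lava lamp application. As you can see, I can use both of my hands to squeeze these blobs together into one mass. I can inject heat into the system like this, or I can pull the blobs apart with two of my fingers. It's completely intuitive; you don't need an instruction manual. The interface just kind of disappears. This started out as a sort of screensaver app made by one of the Ph.D. students in our lab, Ilya Rosenberg. But I think its real value shows up here.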

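Here's what's great about a multi-touch sensor: I can work this with as many fingers as I like, but of course multi-touch also inherently means multi-user. So Chris could come up here and interact with another part of the lava while I play with this part over here. You can think of it as a new kind of sculpting tool: I heat part of it up to make it more malleable, then let it cool and harden to a certain degree. Google should have something like this in their lobby. (Laughter)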

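I'll show you something more practical while this loads. This is a photographer's light box application. Again, I can use both of my hands to interact with these photos and move them around. But what's even cooler is that with two fingers, I can actually grab a photo and stretch it out really easily, like this. I can pan, zoom, and rotate it freely. I can do it with both of my hands, or just with two fingers of either hand. If I grab the whole canvas, I can do the same thing and stretch it out. I can also do several things at once: I hold this one down, then grab another one and stretch it out like this.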
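The talk does not explain how the light box turns two moving fingers into a pan, zoom and rotation. One common approach, sketched here as an assumption rather than Han's actual code, is to find the similarity transform that carries the two old touch points onto the two new ones. Treating points as complex numbers, that transform is z -> a*z + b: the magnitude of a is the zoom factor, its angle is the rotation, and b is the pan. The function name `two_finger_transform` is hypothetical.

```python
import cmath
import math


def two_finger_transform(p1, p2, q1, q2):
    """Return (scale, rotation_radians, apply) for fingers moving p1->q1 and p2->q2.

    Points are (x, y) tuples. The transform is z -> a*z + b in the complex
    plane, which combines uniform scaling, rotation and translation.
    """
    P1, P2, Q1, Q2 = (complex(*pt) for pt in (p1, p2, q1, q2))
    if P1 == P2:
        raise ValueError("the two starting touch points must differ")
    a = (Q2 - Q1) / (P2 - P1)  # scale and rotation packed into one complex factor
    b = Q1 - a * P1            # translation that keeps finger 1 under its new spot

    def apply(pt):
        """Map any point of the photo through the same pan/zoom/rotate."""
        z = a * complex(*pt) + b
        return (z.real, z.imag)

    return abs(a), cmath.phase(a), apply


# Fingers start 100 px apart horizontally, then spread to 200 px and turn 90 degrees.
scale, angle, apply = two_finger_transform((0, 0), (100, 0), (50, 50), (50, 250))
print(scale, round(math.degrees(angle), 6))  # 2.0 90.0
print(apply((100, 0)))                       # (50.0, 250.0)
```

Because two points are enough to fix scale, rotation and translation together, a single gesture covers all three operations, which is why the demo needs no separate zoom or rotate tool.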

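Again, there's no interface in sight here. No manual needed. It does exactly what you'd expect, especially if you've never touched a computer before. Now, when you have initiatives like the hundred-dollar laptop, I have real reservations about introducing a whole new generation of people to computing with the traditional windows-and-mouse pointer interface. Multi-touch is what I think is really the way we should be interacting with machines from now on. (Applause) Of course, I can bring up a keyboard here. I can bring up what I've typed and place it over here. Obviously, this is no different from a standard keyboard, but of course I can rescale the keyboard to fit my hands. That's really important, because with today's technology there's no reason we should have to conform to a physical device. That leads to bad things, like repetitive strain injury. We have so much great technology today that these interfaces should start conforming to us. So far, far too little has been done to actually improve the way we interact with interfaces. This virtual keyboard is probably actually the wrong direction to go. You can imagine that in the future, as we develop this kind of technology, a keyboard could drift along automatically as your hands move away, and intelligently anticipate which key you are about to strike. So, again, isn't this great?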

Audience: Where's your lab?

Jeff Han: I'm a research scientist at New York University in New York City.

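Here's an example of another kind of app. I can make these little fuzz balls. It remembers the strokes I make. Of course, I can do it with both hands. You'll notice it's pressure-sensitive. But what's really nice is this: I've already shown you the two-finger gesture that lets you zoom in really quickly. Because you don't have to switch to a hand tool or a magnifying-glass tool first, you can keep creating things continuously at many different scales, all in one go. I can build big things out here, then go back, really quickly, to where I started, and build even smaller things here.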

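This will become really important once we start doing things like data visualization. For instance, I think we all really enjoyed Hans Rosling's talk, and he really emphasized something I've been thinking about for a long time: we have all this great data, but for some reason it's just sitting there. We're not really using it. I think part of the answer is that graphics, visualization, and inference tools can help us work with this data. But a big part of it, and what I'm focusing on, is building better user interfaces that let you drill down into data like this while still keeping the big picture in view.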

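Now, let me show you another application. This one is called WorldWind, and it was developed by NASA. It's like Google Earth, which we've all seen; this is kind of an open-source version of it. There are plug-ins that load the various datasets NASA has collected over the years. But as you can see, I can use the same two-finger gestures to dive down into the planet really quickly. Again, there's no interface in sight. It really lets anyone feel immersed, and it does exactly what you'd expect, you know? Again, there's no interface here; the interface just disappears. I can switch to different data views. That's what's great about this app. Like this. NASA is really cool. They have these hyperspectral images, which are false-colored and very useful for determining how lush the vegetation is. Let's go back to where we were.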

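The great thing about mapping applications is that they're not just 2D; they can be 3D as well. So again, with a multi-touch interface, you can use a gesture like this to tilt the view up like this, you know. You're not limited to simple 2D panning and motion. We've developed this gesture where you just put down two fingers; that defines an axis of tilt, and I can tilt up or down freely. That's something we just came up with here, you know? Maybe it's not the best way to do it, but there are so many interesting things you can do with this kind of interface. It's fun even if you're just playing around. (Laughter)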

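So the last thing I want to show you is this. I'm sure we can all think of lots of entertainment applications you could build with this thing. I'm more interested in the creative applications we can build with it. Now, here's a simple program where I can draw a curve. When I close the curve, it becomes a character. But the interesting thing is that I can add control points. Then I can manipulate them simultaneously with the fingers of both hands. You'll notice what it does. It's like puppeteering: here I can use all ten of my fingers to draw and animate a character.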

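Now, there's actually a lot of math going on under the hood for this to control the shape and respond correctly. I mean, this technique of manipulating a shape with multiple control points is actually state of the art. It was only published at a computer graphics conference last year, but it's a great example of the kind of research I really love: all this computing power applied to make things do the right thing, the intuitive thing, exactly what you expect.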

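Multi-touch interaction research is a very active field in HCI right now. I'm not the only one doing it; a lot of other people are working in this area too. This kind of technology is going to let even more people get into the field, and I'm really looking forward to talking with all of you over the next few days and seeing how it can apply to your own fields. Thank you, everyone.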


(Figure: Tablet shipments continue to grow)


(Figure: Wearable shipment forecast)


Commentary

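- What Jinha Lee and Jeff Han teach us about human-computer interfaces: tablets and wearable computers still need more interface innovation. Taiwan should strengthen R&D on human-computer interfaces for tablets and wearables to raise the added value of innovation;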

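- TSMC, whose semiconductor technology leads by a wide margin, shows that strategies for industrial and technological upgrading matter far more than the Cross-Strait Service Trade Agreement. Only industrial upgrading can bring strong revenue growth and, with it, greater industrial competitiveness; only then will GDP and tax revenue grow substantially;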


Friday, April 11, 2014

Record US oil and shale gas output and its impact from 2016 to 2025: weaker Russian finances, a stronger US dollar, more efficient gas-fired power generation, and electric vehicles becoming the new mainstream industry

Advances in shale gas extraction: US projects it will no longer need to import crude oil by 2037

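The number of US oil and gas exploration wells rose by 80 year over year, setting a new record. According to a report by the US Energy Information Administration (EIA), rising output from oil fields in North Dakota and Texas means the US is projected to no longer need to import crude oil by 2037. Bloomberg reported that EIA spokesman John Krohn said this is the "first time" the agency's annual energy report has projected that oil imports could "reach zero," 23 years from now.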

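The difficulty of such a forecast is that analysts must estimate precisely how much crude lies thousands of feet underground, how quickly extraction technology will advance, and whether oil prices will make extraction cost-effective.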

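FWBP reported that Stephen Schork, president of the energy consultancy Schork Group Inc., noted that 10 years ago the US relied on imports for natural gas, "but now it looks like supply is sufficient for export." How the situation will change over the next few years remains open to debate.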

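According to the EIA's optimistic scenario, average US domestic crude output will rise to 13 million barrels per day in 20 years; even without major breakthroughs in extraction technology, the conservative estimate is still 10 million barrels per day. US oil imports have already fallen from 13 million barrels per day in 2006 to 5 million barrels per day, all thanks to major advances in shale extraction technology.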

The growing global impact of US shale oil and shale gas

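The widespread extraction and large-scale use of shale oil and shale gas will profoundly disrupt and reshape today's energy consumption mix, which is dominated by oil and coal.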

US oil consumption falls while US crude production rises

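The shale oil revolution is redrawing the global energy map. As the International Energy Agency (IEA) put it in its latest World Energy Outlook, US energy development has far-reaching implications whose effects will be felt outside North America and across the entire energy industry. With the surge in unconventional oil and gas output, growth in US energy production will accelerate the reorientation of international oil trade and put pressure on traditional energy producers and the pricing mechanisms built around them. We will feel these changes one by one in the years ahead.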

The US will become the largest oil producer in 2017

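The IEA's report released last November projected that the US will overtake Saudi Arabia as the world's largest oil producer in 2017. The organization also forecast that the US is already close to achieving energy self-sufficiency, something previously unimaginable. This forecast contrasts sharply with the IEA's earlier reports, which said Saudi Arabia would remain the world's largest oil producer until 2035.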

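The IEA expects US oil imports to keep falling, with North America becoming a net oil exporter around 2030 and the US becoming essentially self-sufficient in energy around 2035. "The United States, which currently imports around 20% of its total energy needs, becomes all but self-sufficient in net terms, a dramatic reversal of the trend seen in most other energy-importing countries."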

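The report says the US will overtake Russia by a wide margin to become the world's largest natural gas producer by 2015, and the largest oil producer by 2017. As cheap domestic supply stimulates demand from industry and power generation, by 2035 the US will rely more on natural gas than on oil or coal. (Note: Once the US dominates both oil and natural gas worldwide, Russia's energy exports will suffer and its economy will be hurt, while Russia also needs to import large amounts of grain. I suspect Russia's invasion of Ukraine may well be a strategic move, since much of Russia's imported grain comes from Ukraine.)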

Cheap electricity, batteries, and charging technology will usher in the era of electric transportation

Elon Musk: The Future Is Fully Electric

Interviewed at The New York Times's Dealbook Conference today by Andrew Ross Sorkin, who repeatedly questioned Musk, the CEO of Tesla Motors, about three recent fires in Tesla automobiles, Musk largely shrugged off headlines on the accidents as "misleading" and the number as statistically insignificant. He said no Tesla recall is in the near future.

In the more distant future, he had broader predictions.

"I feel confident in predicting the long term that all transport will be electronic," said Musk, who is also founder and CTO of SpaceX, the space rocket company that's contracting with NASA. He paused slightly. "With the ironic exception of rockets."

Musk says the future of the country's ground transportation will be fully electric, powered by efficient batteries, and that "we are going to look back on this era like we do on the steam engine."

"It's quaint," he said. "We should have a few of them around in a museum somewhere, but not drive them."

Whatever happened to flying cars?

"I kind of like the idea of flying cars on the one hand, but it may not be what people want," he said, adding that noise pollution could be an issue--as might interfering with sight-lines of city skylines.

Extending electric transportation to the skies, though, might be possible, and--yes--Musk even has a plan for it.

"I do think there's a lot of possibility in creating a vertical-takeoff supersonic transport jet. It could come from a startup," Musk said, admitting that if he has another company in the future, "which will happen no time soon," he'd be open to building electric supersonic aircraft. He even has a design in mind--inspired by the Concorde.

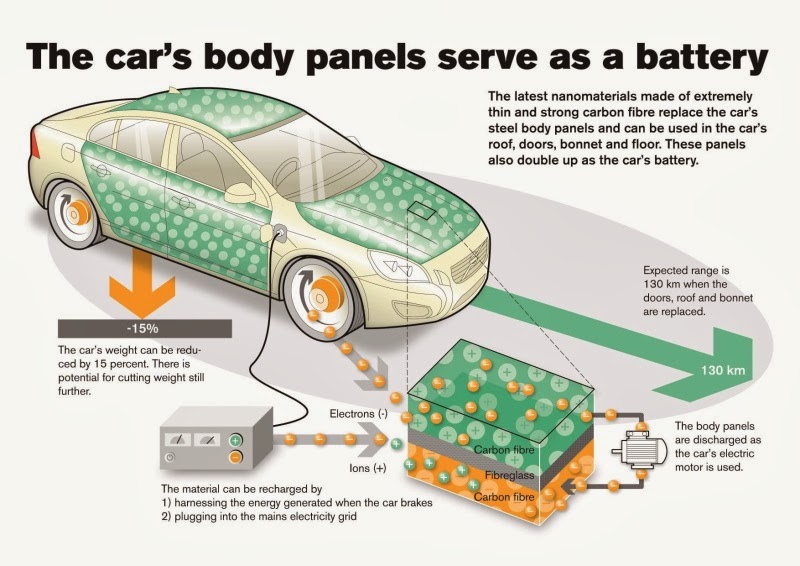

Volvo Develops Battery Technology Built Into Body Panels (a new energy-saving car concept)

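A new type of battery made from ultrafine materials, the nano carbon-fiber battery, has drawn worldwide attention only two years after its debut. It uses carbon nanotubes spun into fibers, which are then woven into cloth; after treatment, this cloth can serve as the battery's positive and negative plates. The electrolyte is an anhydrous organic polymer electrolyte, which allows high-capacity batteries with a high cell voltage (3.8 V) and a specific energy above 230 Wh/kg. The batteries last 1,000 to 1,200 charge-discharge cycles. Because this carbon-fiber material has a specific surface area of up to 2,000 m²/g, nano carbon-fiber batteries are especially compact: only 1/16 the volume of an ordinary lead-acid battery and 1/7 to 1/10 of its weight, while their specific energy is nearly double that of the recently introduced lithium batteries, and the raw materials are easy to obtain.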

納米碳纖素電池體積小、重量輕、能量大,可廣泛應用于微電子學上,外徑為1mm、長度3mm的貼片電池可廣泛應用于電子手表、無線電收音機、電視、電子儀器、通訊、手機BP機上,可替代許多電解電容器,而體積大大縮小。美國近期制成的能放在人體血管裏的超微型馬達,裝上納米碳電池,可疏通人體血管裏的腦血栓。一只微型納米碳電池可做成0.6mm大小,和計算器上的相結合,就成為太陽能儲備電池。可以這樣預計,納米級電池在電子上的應用兩年內可達上萬億美元。 ( 註:目前近量產納米碳纖素電池體積小、重量輕、能量大約鋰離子電池三倍容量,一個 2000 mAh 納米碳纖素電池充電電流可高達 15A ~ 20A,因此最快可在6分鐘充飽,將使電動車超越汽車成為全球最大產業 )

Analysis

[Figure: US shale gas is highly competitive; it will shift the overall energy mix and may substantially change how the US uses energy]

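The number of US oil and gas exploration wells rose by 80 over the year, a new record. According to a report by the US Energy Information Administration (EIA), rising output from oil fields in North Dakota and Texas means the US is projected to no longer need to import crude oil by 2037. Bloomberg reported that EIA spokesman John Krohn said this is the "first time" the agency's annual energy report has projected that oil imports could "reach zero," 23 years from now.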

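The difficulty with such a projection is that analysts must estimate precisely how much crude lies thousands of feet underground, how quickly extraction technology will advance, and whether oil prices will keep extraction cost-effective.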

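According to FWBP, Stephen Schork, president of the energy consultancy Schork Group Inc., noted that ten years ago the US relied on imports for its natural gas, "but now it looks like there is enough to export." How the situation will change over the next few years remains to be seen.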

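The EIA's optimistic scenario projects that average US domestic crude output will rise to 13 million barrels per day within 20 years; even without a major breakthrough in extraction technology, the conservative estimate is 10 million barrels per day. US oil imports have already fallen from 13 million barrels per day in 2006 to 5 million, thanks largely to major advances in shale extraction technology.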

The growing global impact of US shale oil and shale gas

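Widespread extraction and large-scale use of shale oil and shale gas will profoundly disrupt and reshape today's energy consumption structure, which is dominated by oil and coal.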

US oil consumption is falling while US crude production capacity is rising

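The shale oil revolution is redefining the global energy landscape. As the International Energy Agency (IEA) put it in its latest World Energy Outlook, US energy development has far-reaching significance, and its effects will be felt beyond North America and across the entire energy industry. As unconventional oil and gas output surges, growth in US energy production will accelerate the reorientation of international oil trade and put pressure both on traditional energy producers and on the pricing mechanisms built around them. We will feel these changes one by one in the years ahead.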

The US will become the largest oil producer by 2017

[Figure: Such competitive US natural gas will be exported in the future]

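The IEA expects US oil imports to keep falling, North America to become a net oil exporter around 2030, and the US to become essentially energy self-sufficient around 2035. "The United States, which currently imports around 20% of its total energy needs, becomes all but self-sufficient in net terms, a dramatic reversal of the trend seen in most other energy-importing countries."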

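The report says that by 2015 the US will overtake Russia by a wide margin to become the world's largest natural gas producer, and by 2017 the world's largest oil producer. As cheap domestic supply stimulates demand from industry and power generation, by 2035 the US will rely on natural gas more than on oil or coal. (Note: once the US dominates world oil and gas, Russia's energy exports will take a hit and its economy will suffer, while Russia will also need to import large amounts of food. I suspect Russia's invasion of Ukraine may well be a strategic move, since much of Russia's grain imports come from Ukraine.)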

The coming era of electric transportation will be launched by cheap electricity, batteries, and charging technology

[Figure: The major obstacles to electric cars are batteries, charging time, and cost]

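(Note: Most US electricity comes from burning fossil fuels, so how can plug-in electric cars deliver any benefit? If we burn natural gas in a modern General Electric gas turbine, we get 60 percent energy efficiency. If we burn the same fuel in a car's internal combustion engine, we get only 20 percent. The reason is that a power plant offers many more ways to extract value from the fuel; for example, the waste heat can be fed back into a steam turbine as a second source of electricity. So even after all transmission losses are accounted for, using the same fuel to generate electricity at a power plant and then charge electric cars yields more than twice the benefit.)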
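The "more than twice" claim can be checked with a rough fuel-to-wheels calculation. This is a minimal sketch: the 60% plant and 20% engine figures come from the note, while the transmission, charging, and motor efficiencies are assumed round numbers for illustration, not measured values.

```python
# Rough fuel-to-wheels comparison for the note above.
# PLANT_EFFICIENCY and ICE_EFFICIENCY come from the text; the other
# three factors are ASSUMED illustrative values, not measured data.

PLANT_EFFICIENCY = 0.60         # modern gas-turbine plant (from the text)
ICE_EFFICIENCY = 0.20           # car internal combustion engine (from the text)
TRANSMISSION_EFFICIENCY = 0.93  # assumption: ~7% grid transmission/distribution loss
CHARGING_EFFICIENCY = 0.90      # assumption: charger plus battery losses
MOTOR_EFFICIENCY = 0.90         # assumption: electric motor and drivetrain


def ev_chain_efficiency() -> float:
    """Fraction of the fuel's energy that reaches the electric car's wheels."""
    return (PLANT_EFFICIENCY * TRANSMISSION_EFFICIENCY
            * CHARGING_EFFICIENCY * MOTOR_EFFICIENCY)


def ev_advantage() -> float:
    """How many times more useful work the electric path gets from the same fuel."""
    return ev_chain_efficiency() / ICE_EFFICIENCY


if __name__ == "__main__":
    print(f"EV chain efficiency: {ev_chain_efficiency():.1%}")
    print(f"Advantage over the engine: {ev_advantage():.2f}x")
```

With these assumptions the electric path delivers about 45% of the fuel's energy versus 20% for the engine, roughly 2.3 times as much, which is consistent with the note; different loss assumptions will move the ratio.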

Volvo Develops Battery Technology Built Into Body Panels (a new energy-saving car concept)

A research project funded by the European Union has developed a revolutionary, lightweight structural energy-storage component that could be used in future electrified vehicles. There were 8 major participants, with Imperial College London (ICL), United Kingdom, as project leader. The other participants were the Swedish participants Volvo Car Group, Swerea Sicomp AB, ETC Battery and FuelCells, and Chalmers (Swedish Hybrid Centre); Bundesanstalt für Materialforschung und -prüfung (BAM) of Germany; the Greek company Inasco; Cytec Industries, also of the United Kingdom; and Nanocyl (NCYL) of Belgium.

The battery components are moulded from materials consisting of carbon fiber in a polymer resin, nano-structured batteries, and supercapacitors. Volvo says the result is an eco-friendly and cost-effective structure that will substantially cut vehicle weight and volume. In fact, by entirely replacing an electric car's existing components with the new material, overall vehicle weight will be reduced by more than 15 percent.

According to Volvo, reinforced carbon fibers are first sandwiched into laminate layers with the new battery. The laminate is then shaped and cured in an oven to set and harden. The super capacitors are integrated within the component skin. This material can then be used around the vehicle, replacing existing components such as door panels and trunk lids to store and charge energy.

Doors, fenders, and trunk lids made of the laminate actually serve a dual purpose. They are lighter and save space. At the same time, they function as electrical energy-storage components and have the potential to replace the standard batteries currently used in cars. The project promises to make conventional batteries a thing of the past.

Under the hood, Volvo wanted to show that the plenum replacement bar can not only replace a 12-volt system but also save more than 50 percent in weight. This new technology could be applied to both electric and conventional cars and used by other manufacturers.

Promising prospects for high-energy nano carbon-fiber storage batteries

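Major automaker Ford has been pushing for breakthroughs in automotive technology in recent years. TechNews previously reported on Ford's LiDAR-equipped self-driving prototype, and at CES in January Ford innovated again, teaming up with SunPower, a maker of high-efficiency solar cells, to unveil a prototype solar-charged electric car.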

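We have often seen solar car races, but those cars must be extremely lightweight, seat only one person, and stretch their roofs as wide as possible to catch more sunlight, which makes them oddly shaped. Ford's C-MAX Solar Energi concept prototype, by contrast, looks just like an ordinary car, except that its roof carries SunPower X21 high-efficiency solar cells. However high the conversion efficiency, isn't such a small area little better than nothing?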

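Ford's clever solution is a solar concentrator carport. It is built like an ordinary carport, except that the canopy is made of a transparent, light-concentrating material, so it is inexpensive. When the car is parked underneath, the canopy focuses sunlight from a much larger area onto the smaller rooftop solar panel, raising charging efficiency. Ford also built in an autonomous-driving system that lets the car move itself to stay aligned with the focused sunlight. As a result, six hours of solar charging provides 21 miles (about 33.8 km) of driving.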
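To see why a bare roof panel is "little better than nothing" and what a concentrator adds, here is a back-of-the-envelope estimate. Apart from the 6-hour window and the 21-mile figure, every input is a hypothetical round number for illustration: the 1.5 m² panel area, 500 W/m² average irradiance, 21% cell efficiency, 8x concentration factor, and 0.35 kWh/mile consumption are assumptions, not Ford or SunPower specifications.

```python
# Back-of-the-envelope solar harvest for a car roof, with and without a
# concentrator carport. All parameters in __main__ are ASSUMED illustrative values.

KM_PER_MILE = 1.609344  # exact definition of the international mile


def solar_energy_kwh(area_m2: float, irradiance_w_m2: float, efficiency: float,
                     hours: float, concentration: float = 1.0) -> float:
    """Electrical energy (kWh) collected over `hours` of sunshine.

    `concentration` multiplies the sunlight reaching the cells (1.0 = no
    concentrator). Ignores temperature, angle, and conversion losses.
    """
    watts = area_m2 * irradiance_w_m2 * concentration * efficiency
    return watts * hours / 1000.0


def range_miles(energy_kwh: float, kwh_per_mile: float) -> float:
    """Driving range that a given amount of energy buys."""
    return energy_kwh / kwh_per_mile


def miles_to_km(miles: float) -> float:
    return miles * KM_PER_MILE


if __name__ == "__main__":
    # Hypothetical inputs: 1.5 m^2 roof, 500 W/m^2 average sun, 21% cells,
    # 6 hours, 0.35 kWh per mile, and an assumed 8x concentration factor.
    bare = solar_energy_kwh(1.5, 500, 0.21, 6)
    boosted = solar_energy_kwh(1.5, 500, 0.21, 6, concentration=8)
    print(f"Roof only:         {bare:.2f} kWh -> {range_miles(bare, 0.35):.1f} miles")
    print(f"With concentrator: {boosted:.2f} kWh -> {range_miles(boosted, 0.35):.1f} miles")
    print(f"21 miles = {miles_to_km(21):.1f} km")
```

Under these assumptions the roof alone yields under 1 kWh, enough for roughly 3 miles, while an 8x concentrator raises the estimate to about 7.6 kWh, roughly 21 miles; the last line reproduces the 21 miles ≈ 33.8 km conversion. How closely the estimate matches Ford's figure depends entirely on the chosen inputs.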

[Figure: Solar-powered green cars will arrive before 2016]

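The C-MAX Solar Energi is adapted from the C-MAX Energi plug-in hybrid, so if the sun goes down and the battery runs flat, the car can still get home on gasoline. If solar concentrator carports become widespread, however, drivers could park under one and charge while shopping, saving fuel without paying for electricity. Ford estimates this could cut the electricity consumed for charging by as much as 75%.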

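Concentrator carports are also easier to roll out than conventional charging stations. Electric cars currently suffer from a shortage of charging stations, but building them out at scale faces many obstacles, including commercial ones. ECOtality, which operated 12,450 charging stations, went bankrupt in October 2013 and was taken over by Car Charging Group. NRG Energy's charging-station rollout has also run into many obstacles: it planned to install more than 1,000 stations by the end of 2013 but installed only 110, roughly 10% of its goal.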

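For major automakers, the lack of charging stations naturally hampers electric car adoption. But charging stations involve heavy fixed investment, grid connections, billing, and other considerations, so they cannot be rolled out overnight. Rather than wait, it makes sense to skip this step altogether.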

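A new type of battery built from ultrafine materials has drawn worldwide attention only two years after its debut: the nano carbon-fiber battery. Carbon nanotubes are made into fibers and woven into cloth, which after treatment serves as the battery's positive and negative electrode plates. The electrolyte is an anhydrous organic polymer electrolyte, which allows high-capacity cells with a high single-cell voltage (3.8 V) and a specific energy above 230 Wh/kg. The cells last 1,000 to 1,200 charge-discharge cycles. Because the carbon-fiber material has a specific surface area of up to 2,000 m²/g, nano carbon-fiber batteries are especially compact: 1/16 the volume of an ordinary lead-acid battery and 1/7 to 1/10 the weight, with nearly twice the specific energy of recently developed lithium batteries. The raw materials are also easy to obtain.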
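The specific-energy figure translates directly into pack weight: mass = energy / specific energy. A minimal sketch using the 230 Wh/kg claimed above; the 24 kWh pack size is a hypothetical input, and the functions are illustrative helpers, not part of any battery library.

```python
# Battery pack mass from specific energy: mass (kg) = energy (Wh) / specific energy (Wh/kg).
# 230 Wh/kg is the figure claimed in the text; the pack size is a hypothetical input.


def pack_mass_kg(pack_kwh: float, specific_energy_wh_per_kg: float) -> float:
    """Cell mass needed to store `pack_kwh`, ignoring casing, cooling, and wiring."""
    if specific_energy_wh_per_kg <= 0:
        raise ValueError("specific energy must be positive")
    return pack_kwh * 1000.0 / specific_energy_wh_per_kg


def mass_ratio(specific_a: float, specific_b: float) -> float:
    """How many times heavier chemistry B is than chemistry A for the same stored energy."""
    return specific_a / specific_b


if __name__ == "__main__":
    claimed = 230.0  # Wh/kg, from the text
    pack = 24.0      # kWh, hypothetical pack size
    print(f"{pack} kWh at {claimed} Wh/kg -> {pack_mass_kg(pack, claimed):.1f} kg of cells")
```

A 24 kWh pack at 230 Wh/kg needs about 104 kg of cells. Calling `mass_ratio(230, x)` with a lead-acid specific energy `x` from a source you trust is a quick way to sanity-check the "1/7 to 1/10 the weight" claim.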

[Figure: The US Dollar Index will surge as US energy imports and the deficit decline]

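Nano carbon-fiber batteries are small, light, and energy-dense, and can be widely used in microelectronics. Chip batteries 1 mm in outer diameter and 3 mm long can be used in electronic watches, radios, televisions, electronic instruments, communications equipment, mobile phones, and pagers, replacing many electrolytic capacitors in a much smaller volume. An ultra-miniature motor recently built in the US, small enough to be placed inside human blood vessels, can clear cerebral blood clots when fitted with a nano carbon battery. A micro nano-carbon battery can be made as small as 0.6 mm; combined with a calculator's solar cell, it becomes a solar storage battery. Applications of nanoscale batteries in electronics are expected to exceed a trillion US dollars within two years. (Note: nano carbon-fiber batteries now nearing mass production are small, light, and energy-dense, with about three times the capacity of lithium-ion batteries. A 2,000 mAh nano carbon-fiber battery can accept charging currents as high as 15 A to 20 A, so it can be fully charged in as little as 6 minutes. This will let electric vehicles overtake conventional cars and become the world's largest industry.)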
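The 6-minute figure in the note follows from simple arithmetic: ideal charge time = capacity / current, and the C-rate = current / capacity. A minimal sketch, assuming an idealized constant-current charge from empty with no taper and no losses, which real chargers do not achieve; the function names are illustrative.

```python
# Ideal constant-current charge time: hours = capacity (Ah) / current (A).
# Assumes no constant-voltage taper, no losses, and a charge from empty.


def charge_minutes(capacity_mah: float, current_a: float) -> float:
    """Minutes to deliver the full capacity at a constant current."""
    if current_a <= 0:
        raise ValueError("charging current must be positive")
    capacity_ah = capacity_mah / 1000.0
    return capacity_ah / current_a * 60.0


def c_rate(capacity_mah: float, current_a: float) -> float:
    """Charging current as a multiple of capacity (1C fills the cell in one hour)."""
    return current_a / (capacity_mah / 1000.0)


if __name__ == "__main__":
    for amps in (15.0, 20.0):  # the current range quoted in the note
        print(f"2000 mAh at {amps:.0f} A: {charge_minutes(2000, amps):.1f} min "
              f"({c_rate(2000, amps):.1f}C)")
```

At 20 A the 2,000 mAh cell fills in 6 minutes (a 10C rate), and at 15 A in 8 minutes (7.5C), so "as little as 6 minutes" corresponds to the top of the quoted current range.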

Analysis

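- US supply will surge and completely rewrite the global natural gas landscape. The US has already replaced Russia as the world's largest natural gas producer, thanks mainly to improved technology for extracting gas from shale. Going forward, because Russia's public finances depend heavily on oil and gas revenue, and with windfall oil profits gone (high extraction costs, stable oil prices) and a doubtful outlook for natural gas, Russia is not an attractive investment target.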

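- 2014 to 2020: electric cars and electric buses will become widespread, driven by four trends: nano carbon-fiber batteries, car bodies with built-in carbon-fiber surface batteries, fast-charging technology, and efficient modern natural gas power generation. Energy efficiency is projected to reach 2.5 to 3 times today's level, and the US will very likely become the world's largest electric vehicle market;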

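- If concentrator carports and rooftop solar cells are combined with efficient modern solar electric cars, energy efficiency is projected to reach 4 to 6 times today's level;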

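- From QE tapering to a strengthening dollar: by 2020 the US can begin exporting energy while cutting energy imports to a minimum, the dollar will enter an era of exceptional strength, and American prosperity will return;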

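- Impacts for 2016 to 2025: weaker Russian finances, a stronger dollar, higher efficiency in gas-fired power generation, and electric vehicles becoming the new mainstream industry;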

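- Because nano carbon-fiber batteries, carbon-fiber surface-battery car bodies, fast-charging technology, and more efficient modern gas-fired generators are all expected to make major breakthroughs in 2016, Taiwan should seize this wave of opportunity, from semiconductors to electrical engineering. That is true industrial upgrading, and it matters far more than free trade agreements (FTAs) or the Cross-Strait Service Trade Agreement;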

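- The book Reinventing Fire shows how the US can successfully shift from oil and coal to clean, efficient energy over the next 40 years. This pioneering business book synthesizes market-led solutions and peer-reviewed analysis across the transportation, buildings, industry, and electricity sectors, and lays out pathways and competitive strategies for running a US economy 158% larger in 2050 with no oil, no coal, no nuclear energy, one-third less natural gas, and no new inventions.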
